Runtime

The orchestration engine for distributed AI

Coordinate, schedule, and manage AI workloads across heterogeneous hardware—securely and at scale.

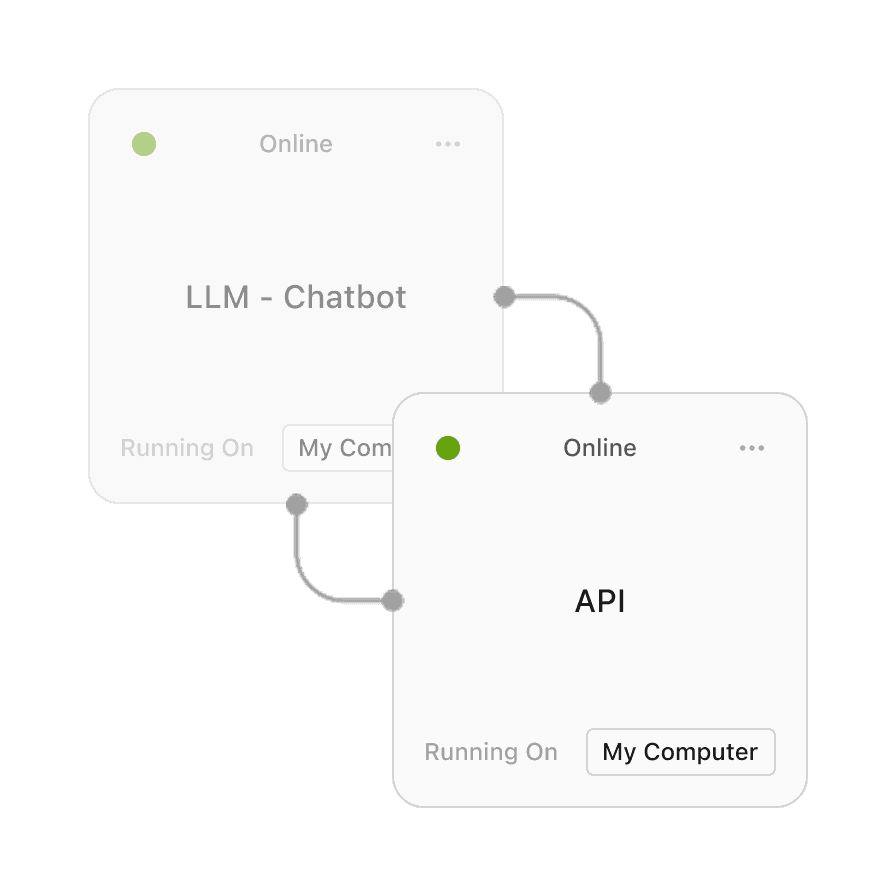

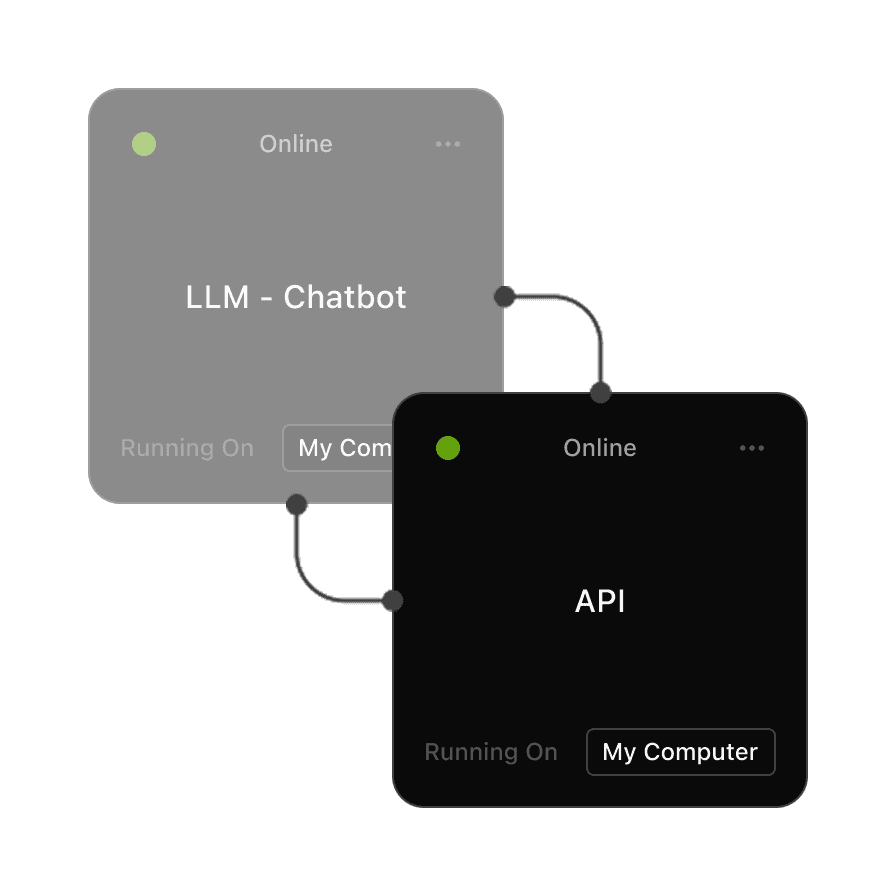

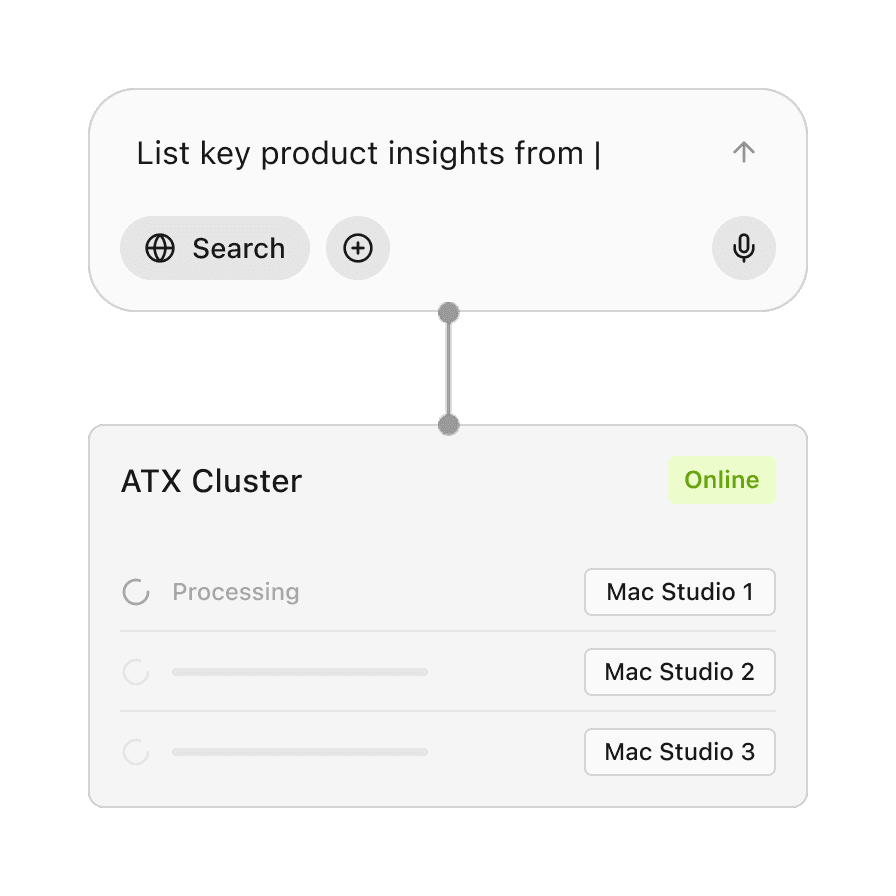

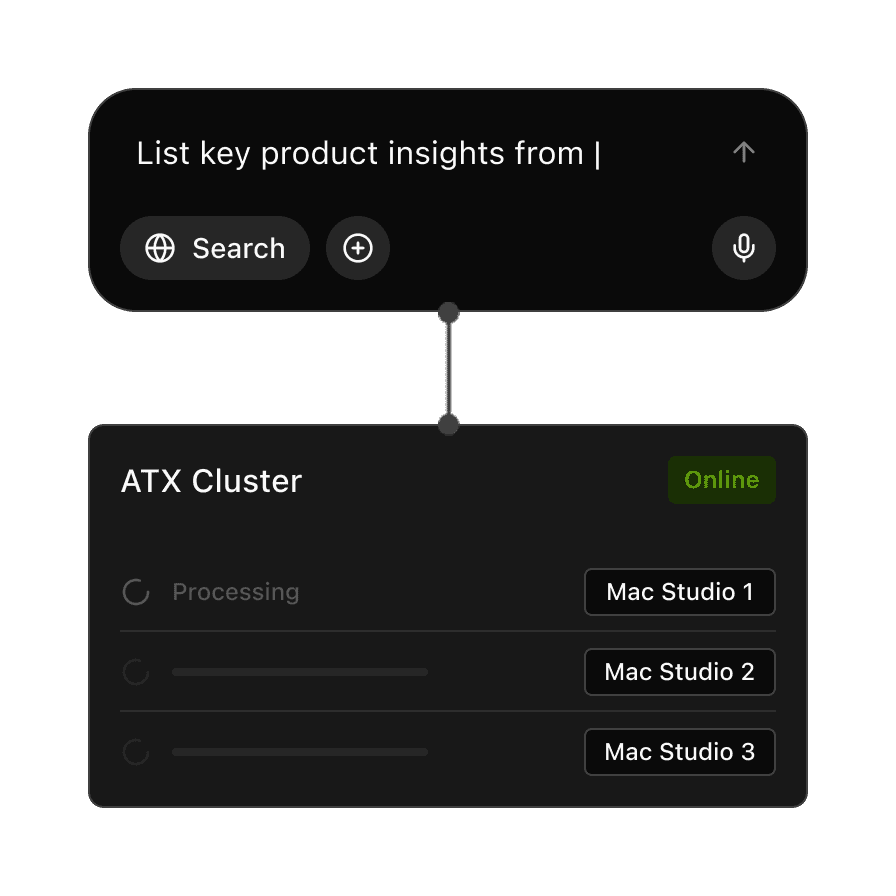

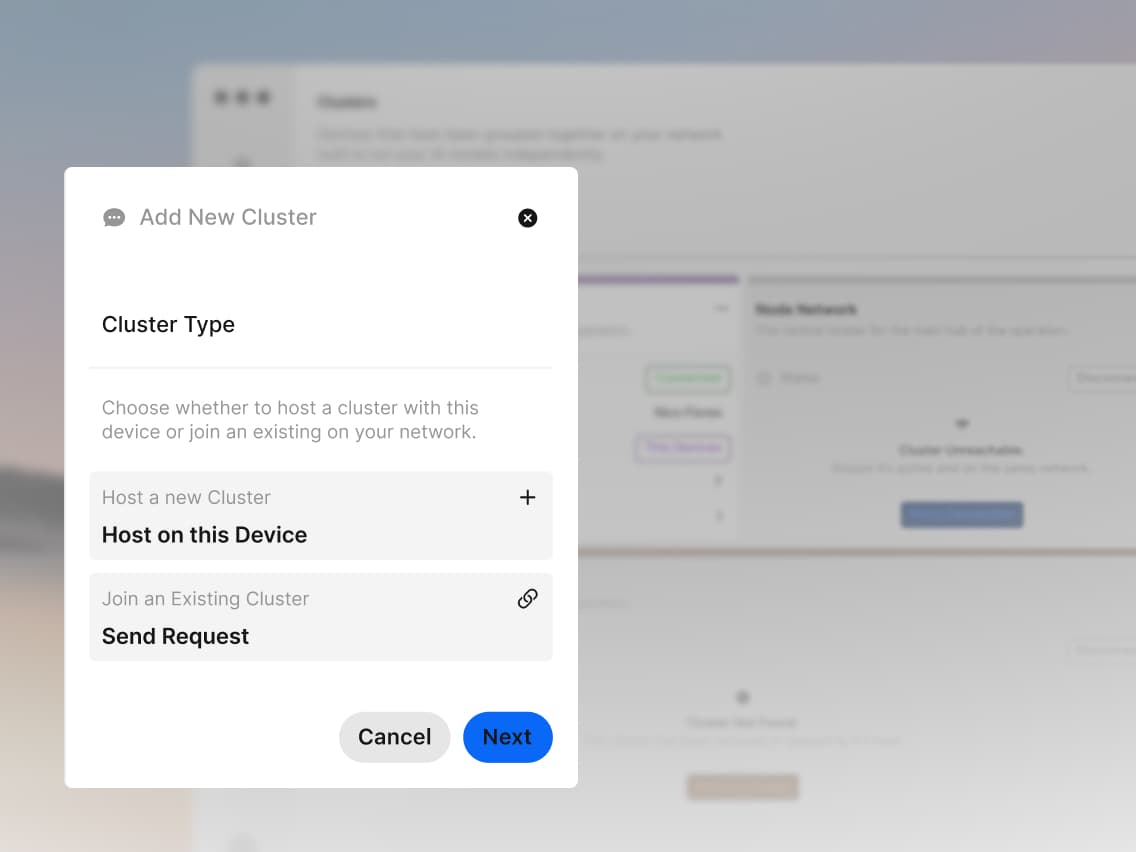

Runtime is the control layer that powers all webAI deployments. It manages how and where models run, allocating tasks across devices and clusters to keep utilization high and performance consistent. Whether executing one model on a single Mac or hundreds across a private network, Runtime ensures reliability, visibility, and sovereignty.

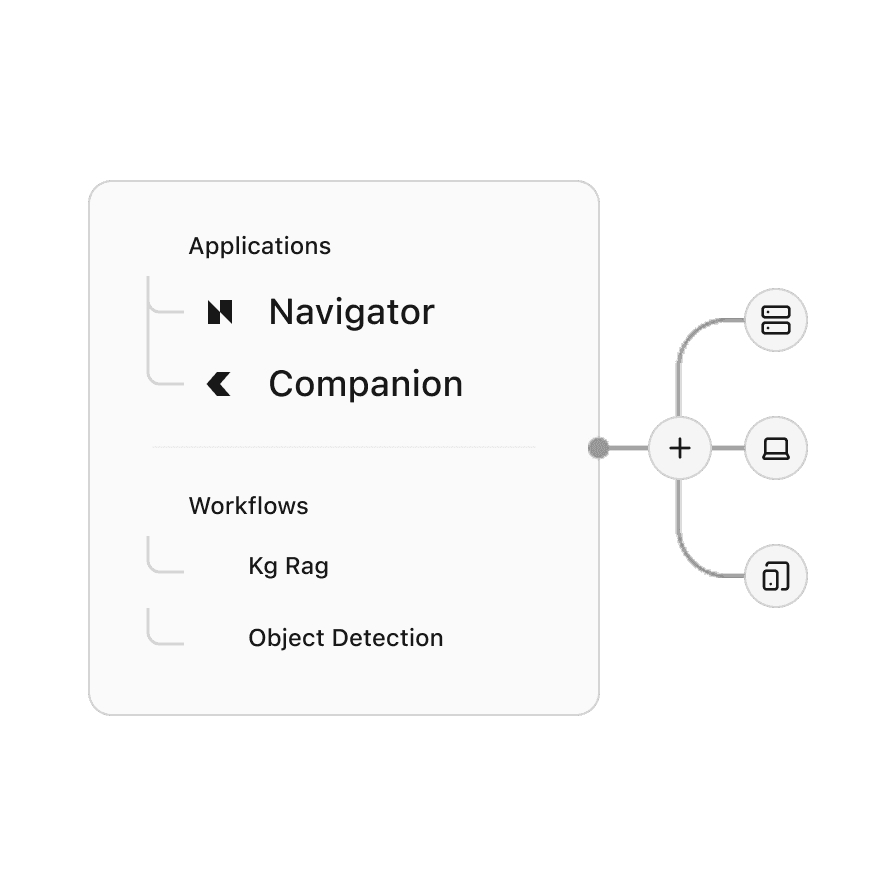

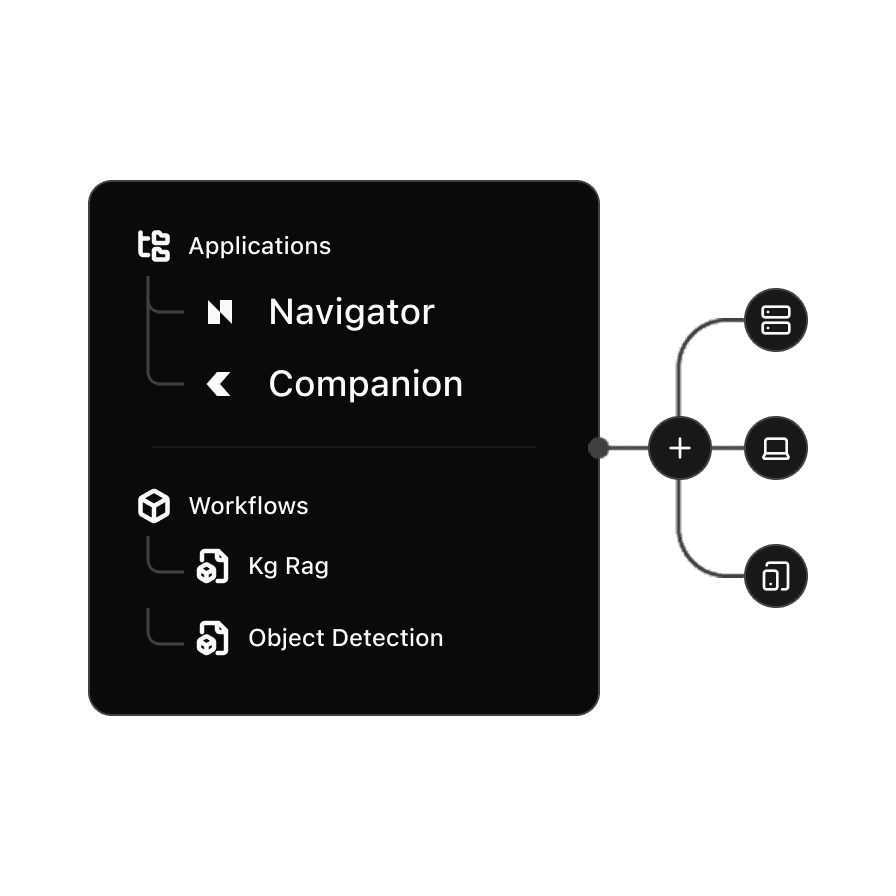

Distributed Orchestration

Automatically coordinates AI workloads across devices and clusters in heterogeneous edge environments.

Seamless Scaling

Add or remove hardware dynamically; Runtime balances workloads to maintain peak efficiency.

Optimized for Mac

Works across the entire Apple hardware ecosystem.

Resilient by Design

Minimizes disruption when nodes go offline or workloads shift, with initial failover support in place.

Private and Secure

Runs locally within your network after setup, with verified dependencies and no external runtime calls.

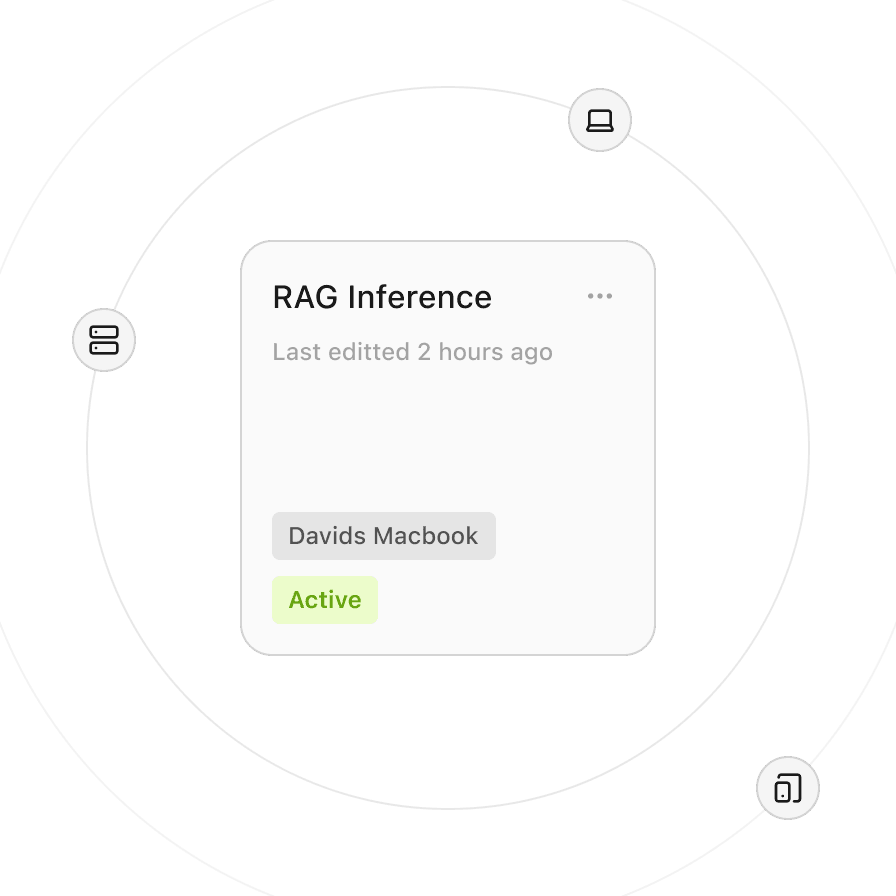

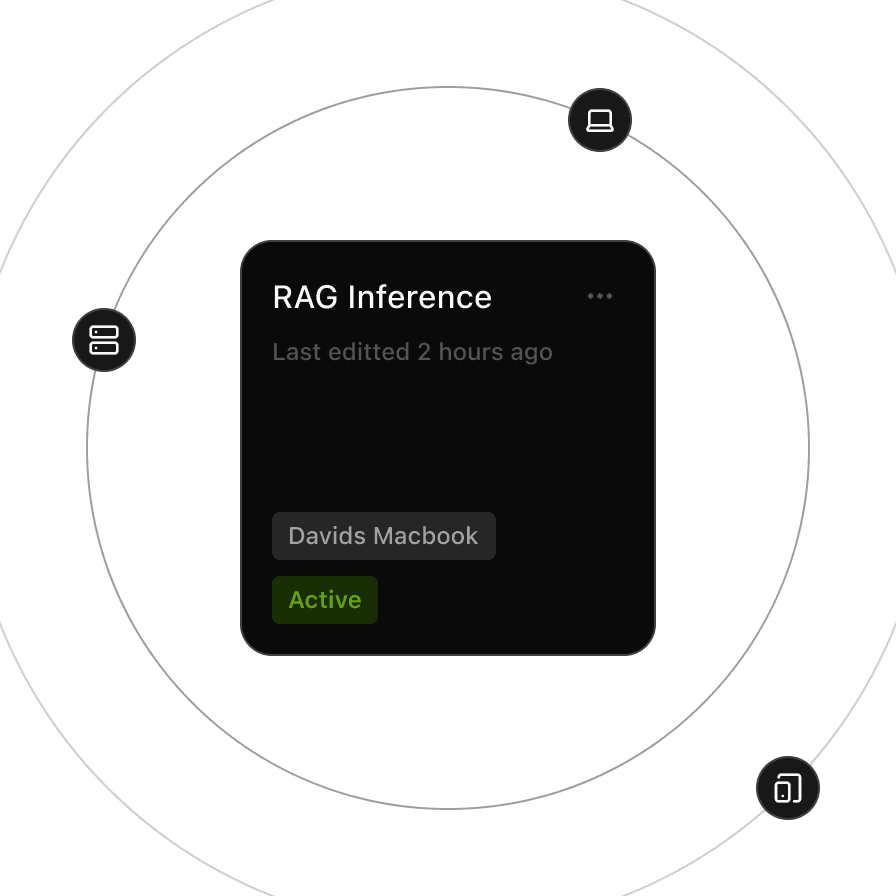

Inside Runtime: the distributed systems layer that turns existing hardware into a cohesive AI cluster.

Automatically assigns workloads based on resource availability, latency, and performance metrics.
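To make the idea of metric-based assignment concrete, here is a minimal, hypothetical sketch in Python. It is not webAI's scheduler and does not use any Runtime API. The `Node` and `Workload` types, the `assign` function, and the scoring rule (measured throughput divided by one plus latency, after filtering out offline nodes and nodes without enough free memory) are all illustrative assumptions.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    # Hypothetical view of one device; fields are illustrative assumptions.
    name: str
    free_memory_gb: float
    latency_ms: float
    throughput: float  # e.g. recent jobs per second; the unit is an assumption
    online: bool = True


@dataclass
class Workload:
    name: str
    memory_gb: float


def score(node: Node) -> float:
    # Assumed rule: favor high throughput, penalize high latency.
    return node.throughput / (1.0 + node.latency_ms)


def assign(workload: Workload, nodes: List[Node]) -> Optional[str]:
    # Resource availability: only online nodes with enough free memory qualify.
    candidates = [
        n for n in nodes if n.online and n.free_memory_gb >= workload.memory_gb
    ]
    if not candidates:
        return None  # nothing can host this workload right now
    best = max(candidates, key=score)
    best.free_memory_gb -= workload.memory_gb  # reserve capacity on the chosen node
    return best.name


if __name__ == "__main__":
    cluster = [
        Node("mac-studio", 64.0, 5.0, 120.0),
        Node("macbook-air", 16.0, 1.0, 60.0),
        Node("mac-mini", 32.0, 8.0, 90.0, online=False),  # offline, skipped
    ]
    print(assign(Workload("llm-7b", 20.0), cluster))   # only mac-studio has room
    print(assign(Workload("vision", 4.0), cluster))    # lower latency wins
    print(assign(Workload("huge", 100.0), cluster))    # no node fits
```

Run as a script, this prints `mac-studio`, `macbook-air`, and `None`. A production orchestrator would also weigh things like model placement, network topology, and rebalancing when nodes join or leave. This sketch only shows the basic filter-then-score pattern.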

The webAI Platform

webAI brings every layer of sovereign AI into one system. From model creation to deployment and orchestration, explore how the pieces fit together to power custom, private AI across your organization.